Banning kids from social media doesn’t make online platforms safer. Here’s what will do that

- Written by Joel Scanlan, Senior Lecturer in Cybersecurity and Privacy, University of Tasmania

The tech industry’s unofficial motto for two decades was “move fast and break things”. It was a philosophy that broke more than just taxi monopolies[1] or hotel chains[2]. It also constructed a digital world filled with risks for our most vulnerable.

In the 2024–25 financial year alone, the Australian Centre to Counter Child Exploitation received nearly 83,000 reports[3] of online child sexual exploitation material (CSAM), primarily on mainstream platforms – a 41% increase from the year before.

Additionally, links between adolescent usage of social media and a range of harms have been found[4], such as adverse mental health outcomes, substance abuse and risky sexual behaviours. These findings represent the failure of a digital ecosystem built on profit[5] rather than protection.

The federal government’s ban on social media accounts for under-16s takes effect this week. Age assurance[6] requirements follow for logged-in search engine users on December 27[7] and for adult content on March 9 2026[8]. We have reached a landmark moment, but we must be clear about what this regulation achieves and what it ignores.

The ban may keep some children out (if they don’t circumvent it), but it does nothing to fix the harmful architecture awaiting them upon return. Nor does it take steps to modify the harmful behaviour of some adult users. We need meaningful change toward a digital duty of care[9], where platforms are legally required to anticipate and mitigate harm.

The need for safety by design

Currently, online safety often relies on a “whack-a-mole” approach: platforms wait for users to report harmful content, then moderators remove it. It is reactive, slow, and often traumatising for the human moderators[10] involved.

To truly fix this, we need safety by design. This principle demands that safety features be embedded in a platform’s core architecture. It moves beyond simply blocking access, to questioning why the platform allows harmful pathways to exist in the first place.

We are already seeing this when platforms with histories of harm[11] add new features, such as “trusted connections” on Roblox[12], which limits in-game connections to people the child also knows in the real world. This feature should have existed from the start.

At the CSAM Deterrence Centre, led by Jesuit Social Services in partnership with the University of Tasmania, our research[13] challenges the industry narrative that safety is “too hard” or “too costly” to implement.

In fact, we have found that simple, well-designed interventions can disrupt harmful behaviours without breaking the user experience for everyone else.

Disrupting harm

One of our most significant findings comes from a partnership with one of the world’s largest adult sites, Pornhub. In the first publicly evaluated deterrence intervention, when a user searched for keywords associated with child abuse, they didn’t just hit a blank wall. They triggered a warning message and a chatbot directing the user to therapeutic help.

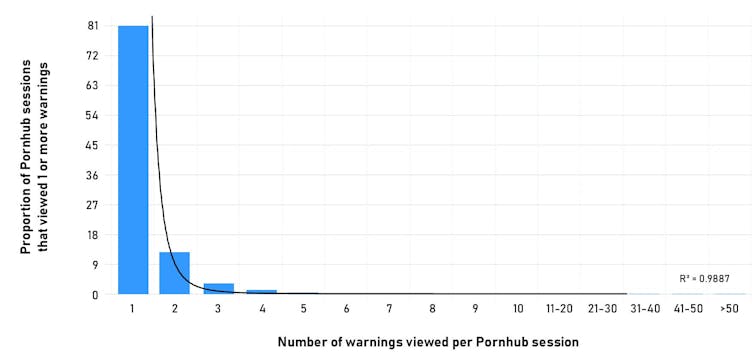

We observed a decrease in searches for illegal material. In addition, more than 80% of users who encountered this intervention did not attempt to search for that content on Pornhub again in that session[14].

This data, consistent with findings from three randomised controlled trials[15] we have undertaken on Australian males aged 18–40, proves that warning messages work.

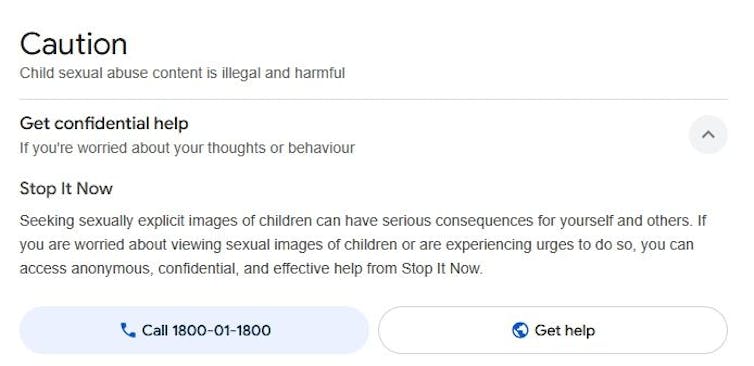

It is also in line with another finding: Jesuit Social Services’ Stop It Now (Australia)[16], which provides therapeutic services to those concerned about their feelings towards children, received a dramatic increase in web referrals after the warning message Google shows in search results for child abuse material was improved earlier this year.

By interrupting the user’s flow with a clear deterrent message, we can stop a harmful thought from becoming a harmful action. This is safety by design, using a platform’s own interface to protect the community.

Holding platforms responsible

This is why it’s so vital to include a digital duty of care in Australia’s online safety legislation[17], something the government committed to earlier this year.

Instead of users entering at their own risk, online platforms would be legally responsible for identifying and mitigating risks – such as algorithms that recommend harmful content or search functions that help users access illegal material.

Platforms can start making meaningful changes today by considering how their services could facilitate harm, and building in protections.

Examples include implementing grooming detection (the automated detection of perpetrators trying to exploit children), blocking the sharing of known abuse imagery and videos and of links to websites that host such material, and proactively removing harm pathways that target the vulnerable, such as children being able to interact online with adults they do not know.

As our research shows, deterrence messaging plays a role too – displaying clear warnings when users search for harmful terms is highly effective. Tech companies should partner with researchers[18] and non-profit organisations to test what works, sharing data rather than hiding it.

The “move fast and break things” era is over. We need a cultural shift where safety online is treated as an essential feature, not an optional add-on. The technology to make these platforms safer already exists. And evidence shows that safety by design can have an impact. The only thing missing is the will to implement it.

References

- [1] taxi monopolies (www.abc.net.au)

- [2] hotel chains (www.library.hbs.edu)

- [3] nearly 83,000 reports (www.afp.gov.au)

- [4] a range of harms have been found (doi.org)

- [5] built on profit (theconversation.com)

- [6] age assurance (www.esafety.gov.au)

- [7] on December 27 (www.abc.net.au)

- [8] adult content on March 9 2026 (www.dundaslawyers.com.au)

- [9] a digital duty of care (minister.infrastructure.gov.au)

- [10] traumatising for the human moderators (theconversation.com)

- [11] platforms with histories of harm (theconversation.com)

- [12] “trusted connections” on Roblox (theconversation.com)

- [13] our research (www.csamdeterrence.com)

- [14] did not attempt to search for that content on Pornhub again in that session (www.iwf.org.uk)

- [15] randomised control trials (doi.org)

- [16] Stop It Now (Australia) (www.stopitnow.org.au)

- [17] in Australia’s online safety legislation (minister.infrastructure.gov.au)

- [18] should partner with researchers (www.csamdeterrence.com)