Australia has led the way regulating gene technology for over 20 years. Here’s how it should apply that to AI

- Written by Julia Powles, Associate Professor of Law and Technology; Director, UWA Tech & Policy Lab, Law School, The University of Western Australia

Since 2019, the Australian Department of Industry, Science and Resources has been striving to make the nation a leader in “safe and responsible” artificial intelligence[1] (AI). Key to this is a voluntary framework based on eight AI ethics principles[2], including “human-centred values”, “fairness” and “transparency and explainability”.

Every subsequent piece of national guidance on AI has spun off these eight principles, imploring business[3], government[4] and schools[5] to put them into practice. But these voluntary principles have no real hold on organisations that develop and deploy AI systems.

Last month, the Australian government started consulting on a proposal[6] that struck a different tone. Acknowledging “voluntary compliance […] is no longer enough”, it spoke of “mandatory guardrails for AI in high-risk settings[7]”.

But the core idea of self-regulation remains stubbornly baked in. For example, it’s up to AI developers to determine whether their AI system is high risk, by having regard to a set of risks that can only be described as endemic to large-scale AI systems[8].

If this high hurdle is met, what mandatory guardrails kick in? For the most part, companies simply need to demonstrate they have internal processes gesturing at the AI ethics principles. The proposal is most notable, then, for what it does not include. There is no oversight, no consequences, no refusal, no redress.

But there is a different, ready-to-hand model that Australia could adopt for AI. It comes from another critical technology in the national interest[9]: gene technology.

A different model

Gene technology is what’s behind genetically modified organisms. Like AI[10], it raises concerns[11] for more than 60%[12] of the population.

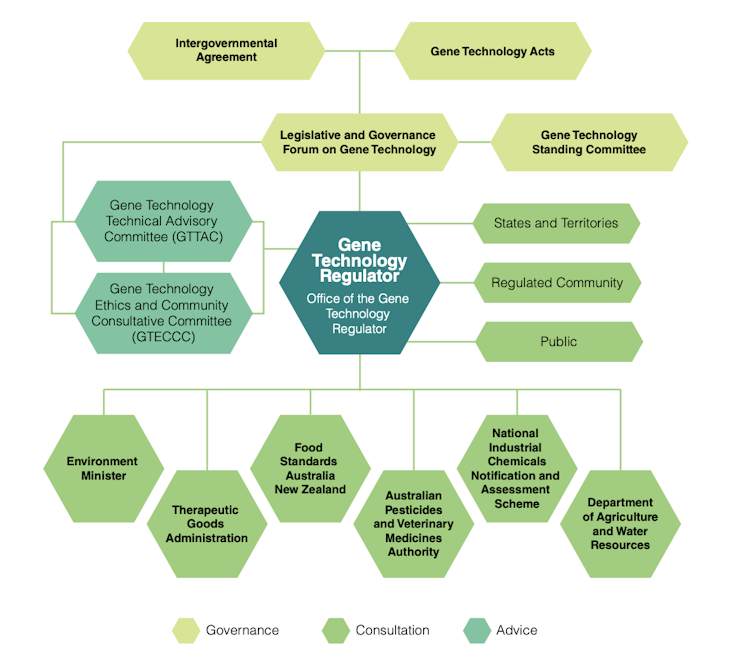

In Australia, it’s regulated by the Office of the Gene Technology Regulator[13]. The regulator was established in 2001 to meet the biotech boom in agriculture and health. Since then, it’s become the exemplar of an expert-informed, highly transparent[14] regulator focused on a specific technology with far-reaching consequences.

Three features have ensured the gene technology regulator’s national and international success[15].

First, it’s a single-mission body. It regulates[16] dealings with genetically modified organisms:

to protect the health and safety of people, and to protect the environment, by identifying risks posed by or as a result of gene technology.

Second, it has a sophisticated decision-making structure[17]. Thanks to this structure, the risk assessment of every application of gene technology in Australia is informed by sound expertise. The structure also insulates that assessment from political influence and corporate lobbying.

The regulator is informed by two integrated expert bodies: a Technical Advisory Committee[18] and an Ethics and Community Consultative Committee[19]. These bodies are complemented by Institutional Biosafety Committees[20] supporting ongoing risk management at more than 200 research and commercial institutions accredited[21] to use gene technology in Australia. This parallels[22] best practice in food safety[23] and drug safety[24].

Third, the regulator continuously[27] integrates public input[28] into its risk assessment process. It does so meaningfully and transparently. Every dealing with gene technology must be approved[29]. Before a release into the wild, an exhaustive consultation process maximises review and oversight. This ensures a high threshold of public safety.

Regulating high-risk technologies

Together, these factors explain why Australia’s gene technology regulator has been so successful. They also highlight what’s missing in most emerging approaches to AI regulation.

First, the mandate of AI regulation typically involves an impossible compromise between protecting the public and supporting industry. As with gene regulation, it seeks to safeguard against risks. In the case of AI, those risks would be to health, the environment and human rights. But it also seeks to “maximise the opportunities that AI presents for our economy and society[30]”.

Second, currently proposed AI regulation outsources risk assessment and management to commercial AI providers. Instead, it should develop a national evidence base, informed by cross-disciplinary scientific, socio-technical[31] and civil society[32] expertise.

The argument goes that AI is “out of the bag”, with potential applications too numerous and too mundane to regulate. Yet molecular biology methods are also well out of the bag. The gene tech regulator still maintains oversight of all uses of the technology, while continually working to categorise certain dealings as “exempt” or “low-risk” to facilitate research and development.

Third, the public has no meaningful opportunity to assent[33] to dealings with AI. This is true regardless of whether it involves plundering the archives of our collective imaginations[34] to build AI systems[35], or deploying them in ways that undercut dignity, autonomy and justice.

The lesson of more than two decades of gene regulation is that regulating a promising new technology until it can demonstrate a history of non-damaging use to people and the environment doesn’t stop innovation. In fact, regulation saves it.

References

- [1] “safe and responsible” artificial intelligence (www.industry.gov.au)

- [2] eight AI ethics principles (www.industry.gov.au)

- [3] business (www.industry.gov.au)

- [4] government (www.finance.gov.au)

- [5] schools (www.education.gov.au)

- [6] proposal (consult.industry.gov.au)

- [7] mandatory guardrails for AI in high-risk settings (consult.industry.gov.au)

- [8] endemic to large-scale AI systems (dl.acm.org)

- [9] critical technology in the national interest (www.industry.gov.au)

- [10] Like AI (ai.uq.edu.au)

- [11] concerns (theconversation.com)

- [12] more than 60% (www.ogtr.gov.au)

- [13] Office of the Gene Technology Regulator (www.ogtr.gov.au)

- [14] highly transparent (www.ogtr.gov.au)

- [15] national and international success (www.ncbi.nlm.nih.gov)

- [16] regulates (www.legislation.gov.au)

- [17] decision-making structure (www.genetechnology.gov.au)

- [18] Technical Advisory Committee (www.ogtr.gov.au)

- [19] Ethics and Community Consultative Committee (www.ogtr.gov.au)

- [20] Institutional Biosafety Committees (www.ogtr.gov.au)

- [21] accredited (www.ogtr.gov.au)

- [22] parallels (theconversation.com)

- [23] food safety (www.foodstandards.gov.au)

- [24] drug safety (www.tga.gov.au)

- [25] Office of The Gene Technology Regulator (www.genetechnology.gov.au)

- [26] CC BY (creativecommons.org)

- [27] continuously (www.ogtr.gov.au)

- [28] public input (www.ogtr.gov.au)

- [29] must be approved (www.ogtr.gov.au)

- [30] maximise the opportunities that AI presents for our economy and society (www.industry.gov.au)

- [31] socio-technical (datasociety.net)

- [32] civil society (www.thebritishacademy.ac.uk)

- [33] opportunity to assent (onezero.medium.com)

- [34] plundering the archives of our collective imaginations (iapp.org)

- [35] build AI systems (papers.ssrn.com)