Why the feds are investigating Tesla's Autopilot and what that means for the future of self-driving cars

- Written by Hayder Radha, Professor of Electrical and Computer Engineering, Michigan State University

It’s hard to miss the flashing lights of fire engines, ambulances and police cars ahead of you as you’re driving down the road. But in at least 11 cases in the past three and a half years, Tesla’s Autopilot advanced driver-assistance system did just that. This led to 11 accidents in which Teslas crashed into emergency vehicles or other vehicles at those scenes, resulting in 17 injuries and one death[1].

The National Highway Traffic Safety Administration has launched an investigation[2] into Tesla’s Autopilot system in response to the crashes. The incidents took place between January 2018 and July 2021 in Arizona, California, Connecticut, Florida[3], Indiana, Massachusetts, Michigan, North Carolina and Texas. The probe covers 765,000 Tesla cars[4] – that’s virtually every car the company has made in the last seven years. It’s also not the first time[5] the federal government has investigated Tesla’s Autopilot.

As a researcher who studies autonomous vehicles[6], I believe the investigation will put pressure on Tesla to reevaluate the technologies the company uses in Autopilot and could influence the future of driver-assistance systems and autonomous vehicles.

How Tesla’s Autopilot works

Tesla’s Autopilot[7] uses cameras, radar and ultrasonic sensors to support two major features: Traffic-Aware Cruise Control and Autosteer.

Traffic-Aware Cruise Control, also known as adaptive cruise control, maintains a safe distance between the car and other vehicles that are driving ahead of it. This technology primarily uses cameras in conjunction with artificial intelligence algorithms to detect surrounding objects such as vehicles, pedestrians and cyclists, and estimate their distances. Autosteer uses cameras to detect clearly marked lines on the road to keep the vehicle within its lane.
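To make the idea of "maintaining a safe distance" concrete, here is a minimal sketch of a generic constant-time-gap controller of the kind often used to explain adaptive cruise control. It is not Tesla's implementation: the function names, the two-second gap, the gains and the acceleration limits are all illustrative assumptions.

```python
# Generic constant-time-gap cruise control sketch.
# All names and numbers here are illustrative assumptions, not Tesla's design.

def desired_gap_m(speed_mps: float, time_gap_s: float = 2.0,
                  standstill_m: float = 5.0) -> float:
    """Following distance that grows with the car's own speed."""
    return standstill_m + time_gap_s * speed_mps


def acc_acceleration(own_speed_mps: float, lead_speed_mps: float, gap_m: float,
                     k_gap: float = 0.2, k_speed: float = 0.5,
                     max_accel: float = 2.0, max_brake: float = -3.5) -> float:
    """Return a commanded acceleration in m/s^2, limited to a comfort range."""
    gap_error = gap_m - desired_gap_m(own_speed_mps)   # negative: too close
    speed_diff = lead_speed_mps - own_speed_mps        # negative: closing in
    command = k_gap * gap_error + k_speed * speed_diff
    return max(max_brake, min(max_accel, command))


# Approaching a stopped vehicle 50 m ahead at 30 m/s: brake at the limit.
print(acc_acceleration(own_speed_mps=30.0, lead_speed_mps=0.0, gap_m=50.0))
```

The sketch assumes the gap and the lead vehicle's speed are already known. In a real system those values come from the perception step described above, which is where camera-based detection matters.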

In addition to its Autopilot capabilities, Tesla has been offering what it calls “full self-driving” features that include autopark[8] and auto lane change[9]. Since its first offering of the Autopilot system and other self-driving features, Tesla has consistently warned users that these technologies require active driver supervision and that these features do not make the vehicle autonomous.

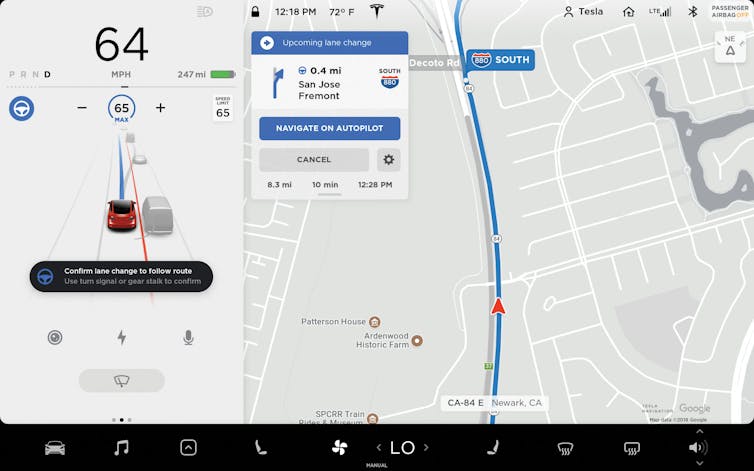

Tesla’s Autopilot display shows the driver where the car thinks it is in relation to the road and other vehicles.

Rosenfeld Media/Flickr, CC BY[10][11]

Tesla is beefing up the AI technology that underpins Autopilot. The company announced on Aug. 19, 2021, that it is building a supercomputer using custom chips[12]. The supercomputer will help train Tesla’s AI system to recognize objects seen in video feeds collected by cameras in the company’s cars.

Autopilot does not equal autonomous

Advanced driver-assistance systems have been available on a wide range of vehicles for many decades. The Society of Automotive Engineers divides the degree of a vehicle’s automation into six levels[13], starting from Level 0, with no automated driving features, to Level 5, which represents full autonomous driving with no need for human intervention.

Within these six levels of autonomy, there is a clear and vivid divide between Level 2 and Level 3. In principle, at Levels 0, 1 and 2, the vehicle should be primarily controlled by a human driver, with some assistance from driver-assistance systems. At Levels 3, 4 and 5, the vehicle’s AI components and related driver-assistance technologies are the primary controller of the vehicle. For example, Waymo’s self-driving taxis[14], which operate in the Phoenix area, are Level 4, which means they operate without human drivers but only under certain weather and traffic conditions.
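The divide between Level 2 and Level 3 can be written down as a simple lookup. The sketch below encodes only what is described above; the names `SaeLevel` and `primary_controller` are hypothetical and chosen for illustration.

```python
from enum import IntEnum


class SaeLevel(IntEnum):
    """The six automation levels, numbered 0 through 5."""
    LEVEL_0 = 0  # no automated driving features
    LEVEL_1 = 1
    LEVEL_2 = 2  # driver assistance; the human is still in charge
    LEVEL_3 = 3
    LEVEL_4 = 4  # no human driver, but only under certain conditions
    LEVEL_5 = 5  # full autonomy with no need for human intervention


def primary_controller(level: SaeLevel) -> str:
    """Who is expected to be primarily in control at a given level."""
    return "human driver" if level <= SaeLevel.LEVEL_2 else "automated system"


print(primary_controller(SaeLevel.LEVEL_2))  # human driver
print(primary_controller(SaeLevel.LEVEL_4))  # automated system
```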

News coverage of a Tesla driving in Autopilot mode that crashed into the back of a stationary police car.

Tesla Autopilot is considered a Level 2 system, and hence the primary controller of the vehicle should be a human driver. This provides a partial explanation for the incidents cited by the federal investigation. Though Tesla says it expects drivers to be alert at all times when using the Autopilot features, some drivers treat the Autopilot as having autonomous driving capability with little or no need for human monitoring or intervention. This discrepancy between Tesla’s instructions and driver behavior[15] seems to be a factor in the incidents under investigation.

Another possible factor is how Tesla assures that drivers are paying attention. Earlier versions of Tesla’s Autopilot were ineffective in monitoring driver attention[16] and engagement level when the system is on. The company instead relied on requiring drivers to periodically move the steering wheel, which can be done without watching the road. Tesla recently announced that it has begun using internal cameras to monitor drivers’ attention[17] and alert drivers when they are inattentive.

Another equally important factor contributing to Tesla’s vehicle crashes is the company’s choice of sensor technologies. Tesla has consistently avoided the use of lidar[18]. In simple terms, lidar is like radar[19] but with lasers instead of radio waves. It’s capable of precisely detecting objects and estimating their distances. Virtually all major companies working on autonomous vehicles, including Waymo, Cruise, Volvo, Mercedes, Ford and GM, are using lidar as an essential technology for enabling automated vehicles to perceive their environments.

By relying on cameras, Tesla’s Autopilot is prone to potential failures caused by challenging lighting conditions, such as glare and darkness. In its announcement of the Tesla investigation, the NHTSA reported that most incidents occurred after dark where there were flashing emergency vehicle lights, flares or other lights. Lidar, in contrast, can operate under any lighting conditions and can “see” in the dark.

Fallout from the investigation

The preliminary evaluation will determine whether the NHTSA should proceed with an engineering analysis, which could lead to a recall. The investigation could eventually lead to changes in future versions of Tesla’s Autopilot and its other self-driving systems. The investigation might also indirectly have a broader impact on the deployment of future autonomous vehicles; in particular, the investigation may reinforce the need for lidar.

Although reports in May 2021 indicated that Tesla was testing lidar sensors[20], it’s not clear whether the company was quietly considering the technology or using it to validate its existing sensor systems. Tesla CEO Elon Musk called lidar “a fool’s errand[21]” in 2019, saying it’s expensive and unnecessary.

However, just as Tesla is revisiting systems that monitor driver attention, the NHTSA investigation could push the company to consider adding lidar or similar technologies to future vehicles.

References

- ^ 11 accidents in which Teslas crashed into emergency vehicles or other vehicles at those scenes, resulting in 17 injuries and one death (static.nhtsa.gov)

- ^ launched an investigation (www.caranddriver.com)

- ^ Florida (www.nytimes.com)

- ^ covers 765,000 Tesla cars (www.motortrend.com)

- ^ not the first time (www.theverge.com)

- ^ researcher who studies autonomous vehicles (scholar.google.com)

- ^ Tesla’s Autopilot (www.tesla.com)

- ^ autopark (www.youtube.com)

- ^ auto lane change (www.youtube.com)

- ^ Rosenfeld Media/Flickr (flickr.com)

- ^ CC BY (creativecommons.org)

- ^ building a supercomputer using custom chips (www.cnbc.com)

- ^ six levels (www.sae.org)

- ^ self-driving taxis (theconversation.com)

- ^ driver behavior (doi.org)

- ^ were ineffective in monitoring driver attention (www.wsj.com)

- ^ internal cameras to monitor drivers’ attention (www.cnbc.com)

- ^ avoided the use of lidar (venturebeat.com)

- ^ lidar is like radar (www.autoweek.com)

- ^ Tesla was testing lidar sensors (www.bloomberg.com)

- ^ a fool’s errand (techcrunch.com)

- ^ You can get our highlights each weekend (theconversation.com)